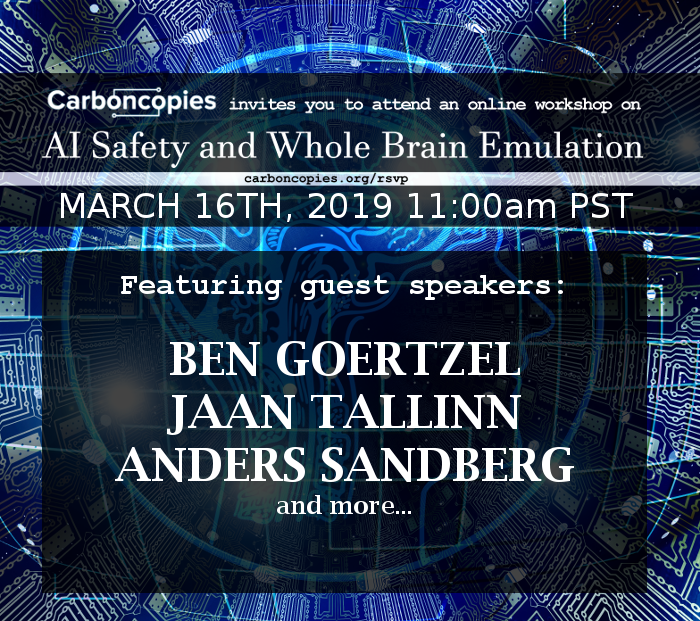

Carboncopies Workshop: Whole Brain Emulation and AI Safety

A written summary of the event by Dr. Keith Wiley is available at this link. The entire workshop is available on our YouTube channel. There you can find:

- The raw recording of the livestream (5+ hours) at this link.

- The playlist containing original (high resolution, clear audio) recorded interviews at this link.

- A transcript of the full workshop can be found at this link.

The workshop included audience participation:

- Audience members were able to ask questions in writing by typing them into the chat of the YouTube livestream. A staff member monitored the chat.

- Audience members were able to interact directly with our expert guests by calling in via web or phone. (URL and phone number removed as the event has concluded.) A staff member moderated the call-in bridge. When a question was selected, the caller's microphone was activated so that the caller could speak directly with our guests.

Many thanks to all who RSVPed!

On 2019-03-16, Carboncopies hosted a workshop on the topic of Whole Brain Emulation and AI Safety, organized around the following main questions:

BCI: If you create a high bandwidth communication pathway between the brain of a biological human and advanced AI, how does that affect AI Safety?

Neuralink and others aim to create such BCI/BMI, and hope to improve the prospects for AI Safety. What is a high bandwidth interface (e.g. compared with rate of speech, or imagery, or percentage of neurons)? What is the predicted effect of such a connection on the human, what is the predicted effect on the AI, and why?

In the popular press, neural interfaces (BCI/BMI) are sometimes conflated with neural prostheses. The human brain has approximately 83 billion neurons, and each neuron communicates at a typical maximum rate of about 100 spikes per second (a few can generate 1000 spikes per second). Human thought is based on processes such as pattern matching, emphasis (e.g. by up/down regulation), and regularity (Hebbian learning). Machine thought is based on program execution, arithmetic, logic, or artificial (typically not spiking) neural networks.
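To give the "high bandwidth" question a sense of scale, the figures quoted above can be combined into a rough back-of-the-envelope bound. The sketch below is only illustrative: it assumes, unrealistically, that every neuron fires at its maximum rate at the same time, and the function names and the one-neuron-per-channel simplification are assumptions made here, not something the workshop materials specify.

```python
# Figures taken from the paragraph above; everything else is an assumption.
NEURONS = 83e9          # approximate number of neurons in the human brain
TYPICAL_MAX_HZ = 100    # typical maximum spikes per second per neuron
FAST_MAX_HZ = 1000      # rate that a few fast neurons can reach


def aggregate_spike_rate(neurons: float, rate_hz: float) -> float:
    """Loose upper bound: spikes per second if every neuron fired at rate_hz."""
    return neurons * rate_hz


def fraction_of_neurons(channels: float, neurons: float = NEURONS) -> float:
    """Share of neurons covered, assuming one neuron per interface channel."""
    return channels / neurons


typical_bound = aggregate_spike_rate(NEURONS, TYPICAL_MAX_HZ)
fast_bound = aggregate_spike_rate(NEURONS, FAST_MAX_HZ)

print(f"typical-rate bound: {typical_bound:.1e} spikes/s")  # 8.3e+12
print(f"fast-rate bound:    {fast_bound:.1e} spikes/s")     # 8.3e+13
```

Calling `fraction_of_neurons(n)` with a hypothetical channel count `n` expresses an interface as a share of all neurons, which is one of the ways of defining "high bandwidth" that the question above raises.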

WBETAKEOFF: If whole brain emulation is accomplished, and implemented on a suitable processing platform, will a whole brain emulation be able to rapidly self-improve in a manner akin to a supposed AI take-off scenario?

How would a human brain emulation be able to follow an engineered or predictable self-improvement curve? How would this compare with the notion of self-improving AI with a utility function or reinforcement learning?

An argument occasionally made to urge caution in the development of whole brain emulation is that a WBE might itself become a problematic runaway AI, but the scenario envisioned is rarely spelled out.

AIACCEL: While carrying out research to accomplish whole brain emulation, what are the activities or types of insights that could risk accelerating advancements towards runaway self-improving AI?

In what way are these activities or types of insight different from those that may be generated in mainstream and general neuroscience?

Concern about accelerating the risky AI improvement curve is sometimes mentioned as a possible reason for caution in the development of whole brain emulation, but the way in which that could happen is not clear. Several specific features of AI development are typically implicated in possible runaway self-improvement scenarios, such as reinforcement learning and utility function following, among others. Which of these might benefit from neuroscience or WBE research, and why?

MERGE: What does a human-machine merger through whole brain emulation look like, and can it improve AI Safety?

How may a substrate-independent human mind be linked or integrated with AI? What might this do to the human mind, and what might it do to the AI? How could that affect AI Safety, and why?

A human-machine merger is sometimes proposed as a solution to avoid a competitive race between humanity and AI. The scenarios envisioned can benefit from clarification.

| Time | Agenda item |
|---|---|
| 11:00 AM | Welcome & Brief Overview of Workshop |
| 11:05 AM | Randal Koene speaks |
| 11:30 AM | Jaan Tallinn interview |
| 12:15 PM | Tallinn Q&A |
| 12:30 PM | Panel discussion |
| 1:00 PM | Panel Q&A |
| 1:25 PM | Anders Sandberg interview |
| 2:30 PM | Anders Sandberg Q&A + panel discussion |
| 3:00 PM | Ben Goertzel recorded talk |
| 3:30 PM | Q&A + panel discussion |