The Preconscious Smart Home

Keith Wiley

Initiated 190601

“Computer, open a new email.”

“To whom?”

“My sister, with the subject line, ‘Plans for the weekend’.”

“What should the email say?”

“We’ll be heading to the Shakespeare festival on Saturday. Want to join us? We’ll probably also grab dinner afterwards.”

“The email has been sent.”

The spoken-word exchange shown above is possible today with a variety of technologies. Let’s try again:

“Compu—”

“The email has been sent.”

?! What just happened?

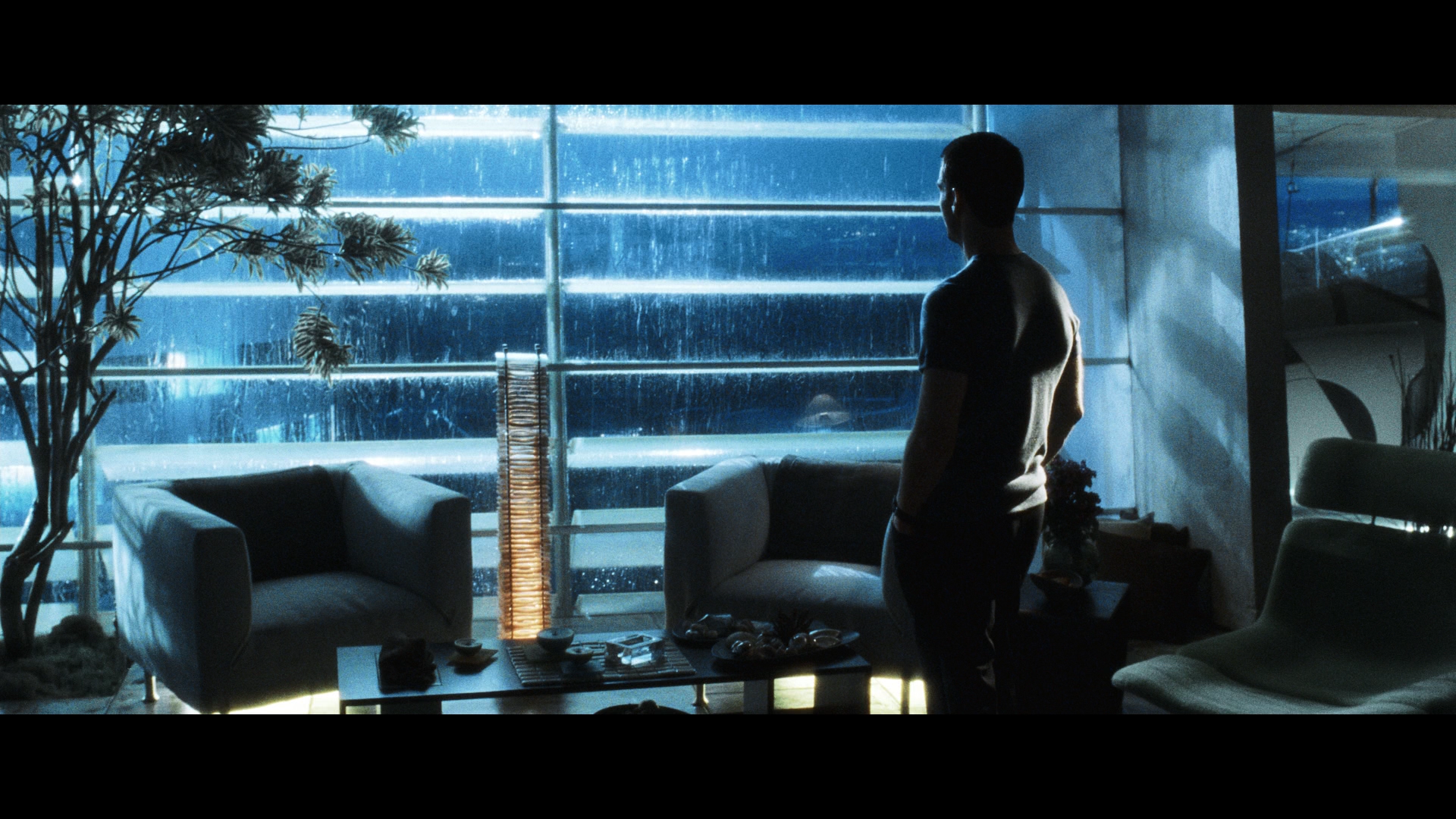

With the email sent (somehow), you head to the kitchen, reach into the food replicator, and retrieve a mug of coffee (the replicator produced both the coffee and the mug, the latter in a manner similar to contemporary 3D printing). You never verbally requested coffee, much less did anything so archaic as pressing buttons, and the machine could not have assumed you wanted coffee since you just as often have tea or juice. There are too many options for the replicator to have guessed, yet it got it right. You walk into your dining room and sit down to find breakfast already waiting for you. Although you enjoy a variety of breakfast options, the spread of eggs and toast laid out before you is precisely what you wanted today: not cereal, which you eat just as often, not a waffle, not a scone. Your smart house got it right. You realize that you wish the coffee were a bit sweeter, and then become aware of a plate of sugar cubes in your peripheral vision on the table. Was it there the whole time, or did the matter replicators built into the table rapidly emit it onto the table's surface as soon as you felt the desire for more sugar? Which came first, your realization that you wanted sugar, or the plate of sugar itself? How can the latter be possible? You notice the ceiling light dimming automatically, and then realize it was a bit too bright a moment before, in that order. As you take the first heavenly sip from the mug, you hear Rossini start to pipe through the audio system and then realize that it is precisely what you wanted to listen to on this particular morning while you eat your breakfast. Did you only decide you wanted to listen to Rossini because you heard it? If so, how did the house know to play it in the first place? No, rather, the house seems to have known you slightly before you knew yourself.

Your environment not only seems to meet your needs without verbal direction, essentially reading your mind, but seems to do so conspicuously before you are aware of your own desires. By the time you head back to your bedroom to get dressed, the outfit you intend to wear is already front and center in your closet, even though you had barely given it a moment's thought before leaving the dining room. Giving yourself a final check in the mirror, you realize that you wish you had a belt with a silver buckle, not brass. Turning to your closet, you find such a belt hanging on the hook. You have never owned one before, but now it's there. The matter replicator could not have built it fast enough in response to your preference for a silver buckle, regardless of any potential mind-reading technology, since even your futuristic metal printer requires several seconds to complete a construction. The replicator absolutely must have begun its construction a few seconds earlier, before you even realized you were displeased with the appearance of the brass buckle in the mirror.

You can construct vast bodies of text faster than you can think of them. Gone are the ancient days of penmanship and typing. Even dictation, which steadily replaced, or at least augmented, keyboard typing in certain domains as the twenty-first century unfolded, is now completely outdated. Instead, while you stare at a blank text editor on your computer monitor, words flood across the page faster than you can form them. They don't even feel like your words, because they lead rather than follow your intentions, yet the text emerging from the white background is precisely what you sat down to write. Any edits or changes you conceive along the way similarly materialize on the screen before you can take control of them. The whole body of text seems to grow organically without your direction, not following your inner voice but running a moment or two ahead of your own conscious awareness. Yet while it doesn't feel like the text belongs to you, the final document is precisely what you set out to produce. It must be yours. Who else's could it be?

How can any of this be possible? At every turn, not only are you able to achieve your goals without expressing them verbally or otherwise, but, more astoundingly, your goals are met before you know you have them. It is an impressive feat in itself that your environment can respond to your mental intentions without your having to voice them, but it is a wholly different experience to find that such tools are apparently a step ahead of your own conscious awareness. The whole world appears to be in a state of perpetual physical flux as futuristic matter converters constantly reshape your environment, not only to suit your wants and needs, and not merely in a seemingly mind-reading fashion, but somehow anticipating each desire slightly before you actually experience it. Consequently, the world always seems to be magically ready for you.

Try to wrap your mind around how weird this experience would be. Every time you wish for some physical object, you conveniently find it sitting on your desk by the time you realize you need it. Your matter converters are in a state of constant activity, tossing out (and recycling) objects faster than you can ask for them, each time emitting something you realize you were about to want at the very moment it appears before you. This happens ceaselessly throughout the day. The whole world simply conforms to your desires.

Research suggesting that our choices and decisions are already made significantly in advance of our conscious awareness of them goes back at least to the 1980s. Benjamin Libet's experiments are widely regarded as the initial foray into the discovery that neurological indications of a decision precede the subject's conscious awareness of that decision by hundreds of milliseconds. In Libet's experiment, subjects freely choose the exact moment at which to take some action, such as lifting a finger, yet the event can be predicted in advance by observing the subject's EEG. Modern variants of the experiment, using fMRI, have pushed the window during which brain scans can anticipate a subject's decision to as long as ten seconds in some cases. In some of these, such as the experiments by Roger Koenig-Robert and Joel Pearson, the subject chooses which of two images to visualize, and fMRI scans can anticipate the choice. In yet another experiment, by John-Dylan Haynes, the subject chooses whether to press a button with the left or the right hand, and again the choice can be anticipated seconds in advance.

When the implications of these experiments are considered, the discussion generally wanders into the morass of free will, attempting to determine whether we still have free will in light of such experiments, or whether our conscious intention (our volition) is in fact not the determinant of our choices and actions. I have no interest in pursuing the implications for free will here. If you are curious about my take on free will, please read my book.

This article merely explores where such research might lead in terms of practical applications. Other attempts to speculate on the value of such discoveries are, frankly, rather mundane, considering the possibility of more accurate lie detectors and such. Is that really the best we can come up with? As illustrated above, in a slightly more distant future, a confluence of technologies may enable some remarkable applications. It will require unobtrusive, efficient, reliable brain scanners, preferably wearable or implantable. Nothing about the story described above would work with a gargantuan fMRI machine. Scanners of the requisite sort are far off indeed. For the text construction example, practically no other technology will be necessary, but it is no small feat to extend the rudimentary experiments above to a version that can read your inner voice as a stream of text from a brain scan, much less to do so well ahead of your otherwise prosaic dictation, which is already feasible today for the mere cost of a microphone. Furthermore, we honestly don't know which mental events offer which lead times of anticipatory neural activity. While binary forced-choice experiments may offer lead times of several seconds, text generation may lead by a much smaller window, though perhaps even that will be sufficient for a rich user experience. We don't know these things yet.

The physical examples above will require steady advances in 3D printing. The culinary examples may require, at the least, a capable kitchen robot and, in a more extended imagining, a biomatter printer or rapid chemistry machine of some sort that we needn't delve into here. In the extreme, something like utility fog, as described by John Storrs Hall, may be used, in which nanobots suspended in the very air around us coalesce to construct objects on a whim (and just as easily dissolve those objects the moment we no longer need them).

Doubtless, each of these technologies presents serious challenges, and the scenario described above will not be possible in the near future. But there is good reason to expect some of these possibilities to emerge in some fashion in the years to come.

This article was typed out, letter by letter, on a 2019 Mac, although I may attempt dictating it after I am done, just for practice.

Keith Wiley is the author of A Taxonomy and Metaphysics of Mind-Uploading, available on Amazon. He is a board member with Carboncopies.org, which promotes research into whole brain emulation, and a fellow with The Brain Preservation Foundation, which promotes research into brain preservation technologies. Keith holds a PhD in computer science from the University of New Mexico and works as a data scientist in Seattle. His website is http://keithwiley.com.